This post originally appeared on the Finn AI blog, which is now part of Glia.

By Gartner’s estimate, there were 1,000 to 1,500 conversational AI vendors in the market in 2019. One large subcategory is chatbot-building platform vendors, which let customers create DIY chatbots. The most commonly provided feature lets customers define intents and map them to responses.

In use cases where you want your chatbot to do many different simple things, this type of platform may be sufficient. Think of a basic home automation bot, where requests like “Turn on living room TV,” “Play party music,” and “What’s the weather like today?” are quite different from each other. Another example is a Wikipedia-style answer bot, where answers are more generic and leave it to the customer to figure out exactly what to do next.

If your business has many similar concepts that show up in chat messages, or you expect your chatbot to perform precise actions for the user, this simplistic intent-to-response mapping method may not work well for you in the long run. The chatbot can struggle to differentiate between questions like “Can I apply for a No-Fee Savings Account?”, “How do I apply for a No-Fee Savings Account?”, and “Do you offer a savings account that has no fees?”, even though the first asks about eligibility, the second about the application process, and the third about product availability.

The Building Blocks of Natural Language Processing

Intents and responses are the building blocks of natural language processing (NLP) science. They are fundamental concepts of how a machine can appear to understand natural language and respond to it.

In its simplest form, you build a classifier that sorts user messages into “intents.” Then you write your business logic to deal with those intents. This classification step is sometimes called “intent matching,” “intent detection,” or “intent recognition.” It can be done in many ways, from simple keyword matching to machine learning models or deep learning with neural networks.
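To make the simplest end of that spectrum concrete, here is a minimal keyword-matching intent classifier in Python. The intent names and keyword lists are hypothetical, chosen only for illustration; a real bot would need far richer matching than exact word overlap.

```python
import re
from typing import Optional

# Hypothetical keyword lists; a real bot would need far more careful curation.
INTENT_KEYWORDS = {
    "greetings": {"hello", "hi", "morning"},
    "check-balance": {"balance"},
}

def match_intent(message: str) -> Optional[str]:
    """Return the intent sharing the most keywords with the message, or None."""
    tokens = set(re.findall(r"[a-z']+", message.lower()))
    best_intent, best_hits = None, 0
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = len(tokens & keywords)
        if hits > best_hits:  # ties keep the earlier intent
            best_intent, best_hits = intent, hits
    return best_intent

print(match_intent("Good morning!"))        # greetings
print(match_intent("What is my balance?"))  # check-balance
print(match_intent("Transfer $50"))         # None
```

Keyword matching is easy to reason about, but it has no notion of how close two intents are, which is exactly what starts to matter as intents multiply.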

However, from a data science perspective, intents are just labels. On the chatbot-building platforms, providing sample messages is just another way of performing data annotation with those labels.

Here is an example of how intent-to-response mapping works:

- You want your chatbot to respond to greetings, so you define an intent called “greetings”

- You then add a few sample messages to the intent (e.g. “How are you today?” or “Hello” or “Good morning”)

- You use the platform to train your model

- Once the classifier can output the intent “greetings,” you write your business logic to respond with “I’m great, thank you!”
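The four steps above can be sketched in Python. This is a minimal illustration, not how any particular platform trains its models: the “training” step here simply builds a bag-of-words centroid per intent and classifies by cosine similarity. The second intent (“check-balance”), its sample messages, its response, and the fallback message are hypothetical additions for contrast.

```python
import math
import re
from collections import Counter
from typing import Optional

def vectorize(text: str) -> Counter:
    """Bag-of-words vector: lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Steps 1-2: define intents and annotate them with sample messages.
# "check-balance" is a hypothetical second intent for contrast.
TRAINING_DATA = {
    "greetings": ["How are you today?", "Hello", "Good morning"],
    "check-balance": ["What is my balance?", "Show my account balance"],
}

# Step 3: "train" by summing each intent's sample vectors into a centroid.
def train(data: dict) -> dict:
    return {intent: sum((vectorize(s) for s in samples), Counter())
            for intent, samples in data.items()}

def classify(model: dict, message: str) -> Optional[str]:
    scores = {intent: cosine(vectorize(message), c) for intent, c in model.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None  # no word overlap at all -> no intent

# Step 4: business logic maps each intent directly to a fixed response.
RESPONSES = {
    "greetings": "I'm great, thank you!",
    "check-balance": "Let me look up your balance.",
}
FALLBACK = "Sorry, I didn't understand that."

def respond(model: dict, message: str) -> str:
    return RESPONSES.get(classify(model, message), FALLBACK)

model = train(TRAINING_DATA)
print(respond(model, "Hello there"))       # I'm great, thank you!
print(respond(model, "Check my balance"))  # Let me look up your balance.
print(respond(model, "Transfer money"))    # Sorry, I didn't understand that.
```

Note how `RESPONSES` is keyed directly by intent name: every response is coupled to exactly one classifier label.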

By following this process, you may be able to launch the first iteration of your virtual assistant’s features quickly. However, you will run into problems as soon as you try to scale your chatbot to deal with more complicated scenarios and concepts.

Problem 1: Increased Intent Density

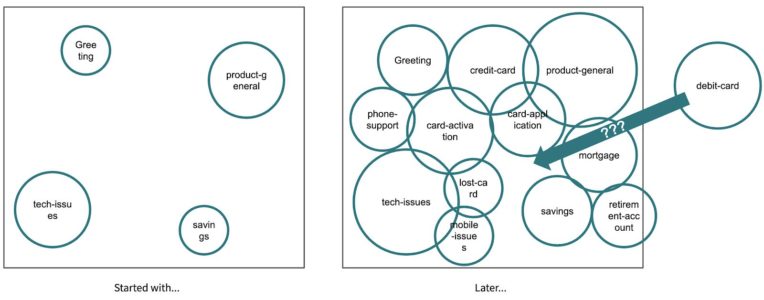

As you add more intents, some of them may overlap with existing ones. For example, in a banking chatbot, you may initially have an intent called “apply-credit-cards” that you use to answer generic questions about how to apply for credit cards, drawing on one of your knowledge base articles. You have trained the chatbot to work quite well on a range of messages like “Do you offer Mastercard?”, “How can I get a Visa Prepaid card?”, or even “I need a credit card to pay my bills.”

If you want to add the ability to guide users through different paths for applying for a Mastercard or Visa, you will need to add new intents like “apply-mastercard” or “apply-visa-card.” You’ll likely want to keep the generic intent for questions about credit cards you don’t provide. However, if both your new and your old intents are trained on phrases containing “Mastercard” and “Visa card,” your intents may overlap.

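Since your responses are directly mapped to the intents, your banking chatbot may respond poorly to ambiguous questions like “I don’t have either Mastercard or Visa, do you offer any of them?” The classifier has to pick a single intent, and with it a single fixed response, for a message that touches several.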

Simple intent-to-response mapping doesn’t work well in a densely populated intent space, because adding more intents usually degrades the existing ones.

In the example above, all intents in the same model initially had distinct topics, such as “apply-savings-account” and “how-to-online-banking,” so they sat relatively far apart in the intent space. However, once you add more nuanced intents, the boundaries move closer to one another and often overlap.
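A toy measurement can make the “intent space” picture concrete. The sketch below assumes a bag-of-words representation (one word-count centroid per intent) and uses cosine similarity as the closeness measure; it reports the most similar pair of intents before and after adding the nuanced “apply-mastercard” and “apply-visa-card” intents. The sample messages are invented for illustration and are not real training data.

```python
import math
import re
from collections import Counter
from itertools import combinations

def centroid(samples):
    """Word-count centroid of an intent's sample messages (bag of words)."""
    c = Counter()
    for s in samples:
        c.update(re.findall(r"[a-z']+", s.lower()))
    return c

def cosine(a, b):
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def closest_pair(data):
    """Return (similarity, intent_a, intent_b) for the most similar pair of intents."""
    model = {intent: centroid(samples) for intent, samples in data.items()}
    return max((cosine(model[x], model[y]), x, y) for x, y in combinations(model, 2))

# Distinct topics: intents sit relatively far apart.
sparse = {
    "apply-savings-account": ["open a savings account", "apply for a savings account"],
    "how-to-online-banking": ["log in to online banking", "reset online banking password"],
    "apply-credit-cards": ["do you offer mastercard", "how can i get a visa card", "i need a credit card"],
}
# Add nuanced intents trained on overlapping card vocabulary.
dense = {
    **sparse,
    "apply-mastercard": ["apply for a mastercard", "i want a mastercard"],
    "apply-visa-card": ["apply for a visa card", "i want a visa card"],
}

for name, data in [("sparse", sparse), ("dense", dense)]:
    sim, a, b = closest_pair(data)
    print(f"{name}: closest pair is {a} / {b} (cosine {sim:.2f})")
# sparse: closest pair is apply-savings-account / apply-credit-cards (cosine 0.22)
# dense: closest pair is apply-credit-cards / apply-visa-card (cosine 0.64)
```

Production models typically use learned representations rather than raw word counts, but the same crowding effect can appear: new, more specific intents land close to the generic intent they were carved out of.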

In this situation, model performance will not improve with more incremental annotation and training. Instead, you will likely need to re-annotate and retrain all of your original intents. Otherwise, model performance will degrade over time and responses will become unpredictable.

We see this as one of the biggest reasons why DIY chatbot projects fail to reach production. It is very easy to start an experimental bot, but it gets harder and harder to add new features, and project owners eventually hit a wall.

Finn AI’s experiments with popular chatbot-building platforms found the practical limit of the platforms’ tools to be as low as 30 to 40 intents. In a deep domain like banking, the number of intents you need to serve real customers will likely far exceed that limit.

Problem 2: Ripple Effects on Coupled Responses

In a simple intent-to-response mapping system, you basically write a response for the scenario defined by each intent. When things are simple, or you don’t have much response content, simplicity is an advantage. You enter the messages you trained your intents on and can expect certain responses back, which lets you quickly test the chatbot as a black box.

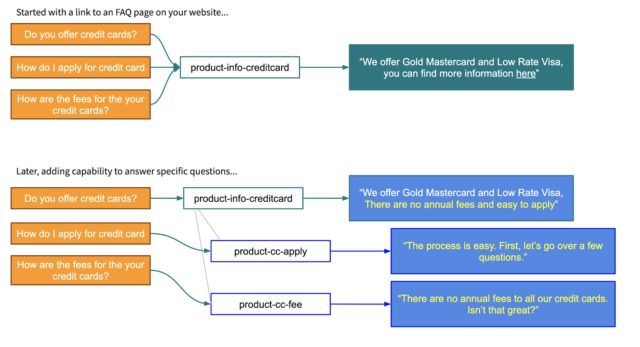

However, when intents become more crowded (as explained in Problem 1) or are split into new, more granular intents, you not only need to retrain your original intents but also need to decide what to do with the responses that are directly mapped to them.

First, you’ll need to replicate the responses and modify them for the new intents (usually with more specific, less generic information or wording). This may require you to rewrite all existing responses, even for the intents you did not touch, because a response may be partially covered, or even contradicted, by another intent’s response.

For example, the response to your original intent, “apply-credit-cards,” may need to change completely to guide the user down different paths. A generic knowledge base article no longer suffices.

One especially troublesome situation arises when your intents are split vertically, to cover more depth for one concept, rather than horizontally, to cover more variations of the same concept.

For example, your generic “apply-online” intent becomes “apply-online-basic-info,” “apply-online-document,” “apply-online-confirm,” and “apply-online-save-for-later.”

The responses to your original intents will most likely change from generic answers to prompts for further action. In addition to the response text, you’ll also need to deal with other effects, such as templated variables, which you must either replicate or replace with new ones. Rewriting the responses by hand this way is probably your best option if you want to keep the intents and responses manageable by non-technical teams (most likely your reason for choosing a bot-building platform in the first place).

The other option is to have your team use a complex UI to define logic that generates a combination of responses, or to write code to handle the complexity. Either way, you lose the ability to have a non-technical team maintain the chatbot.

Either of these options will increase maintenance costs and complicate your ability to add further sophistication to your banking chatbot in the future.
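To make the ripple effect of a vertical split concrete, here is a minimal Python sketch that audits an intent-to-response table after “apply-online” is split. The response text is hypothetical; the audit lists intents the classifier can now emit that have no response, and responses whose intent no longer exists.

```python
# Hypothetical intent-to-response table written before the split.
responses = {
    "apply-online": "You can apply online through our website.",
    "apply-credit-cards": "Here is our guide to applying for credit cards.",
}

# After the vertical split, the retrained classifier emits these labels instead.
intents_after_split = {
    "apply-online-basic-info",
    "apply-online-document",
    "apply-online-confirm",
    "apply-online-save-for-later",
    "apply-credit-cards",
}

def audit(responses, intents):
    """Return (intents lacking a response, responses whose intent is gone)."""
    missing = sorted(intents - set(responses))
    orphaned = sorted(set(responses) - intents)
    return missing, orphaned

missing, orphaned = audit(responses, intents_after_split)
print("Intents with no response:", missing)
print("Responses with no intent:", orphaned)
```

Here all four new “apply-online-*” intents lack responses and the original “apply-online” response is orphaned. An audit like this only finds the gaps; someone still has to write, and get approval for, the new content.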

The Advanced Approach

If intent-to-response mapping has so many long-term problems, why is it the most popular chatbot-building method on offer? Because chatbot-building platforms are generic by nature. They offload the complexity of ensuring the bot’s efficacy to the customer. The main problem is not that the platforms are wrong; it’s that their tools ask the wrong people to do the wrong job.

Intents are essentially classification labels used to annotate utterances. Designing and using these labels well requires knowledgeable data scientists, especially if your domain is narrow and deep and therefore requires a densely populated intent space.

In narrow, deep domains, you need more sophisticated tools and data science approaches to analyze your intent model performance and design the labels from a data science perspective, instead of by intuition.

At Finn AI, label names are irrelevant; the labels could just as well be UUIDs. We want to avoid people mistaking a label’s name for its actual behavior within the model. For us, labels are a pure data science apparatus.

Instead, the Finn AI system works on the concept of “User Goals,” which combine intents, entities, and other dimensions to define what the user wants to achieve. User goals provide a more stable business concept for the domain, for example, “user wants to change their password with the mobile app” or “user wants to check their balance.” Because they decouple the intents from the responses, these goals can lead to a simple response or start a multi-turn conversation.
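The decoupling can be sketched in a few lines of Python. This is not Finn AI’s implementation, only an illustration under stated assumptions: a hypothetical `UserGoal` combines an action with one entity-like dimension (a channel), intent labels are opaque UUIDs, and responses are keyed by goal. Swapping in a retrained model with different labels only changes the label-to-action mapping; the responses stay untouched.

```python
import uuid
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class UserGoal:
    """Stable business concept: what the user wants to achieve (hypothetical shape)."""
    action: str
    channel: Optional[str] = None  # one example of an extra, entity-like dimension

CHANGE_PASSWORD_IN_APP = UserGoal("change-password", "mobile-app")
CHECK_BALANCE = UserGoal("check-balance")

# Responses are written and approved once, against goals rather than intent labels.
RESPONSES = {
    CHANGE_PASSWORD_IN_APP: "Here's how to change your password in the mobile app.",
    CHECK_BALANCE: "Here's your current balance.",
}

def resolve(label: str, entities: dict, label_to_action: dict) -> UserGoal:
    """Combine an opaque intent label with extracted entities into a user goal."""
    return UserGoal(label_to_action[label], entities.get("channel"))

# Model v1: labels are opaque UUIDs that only the data science team manages.
pw_v1, bal_v1 = uuid.uuid4().hex, uuid.uuid4().hex
v1 = {pw_v1: "change-password", bal_v1: "check-balance"}

# Model v2: the password intent was re-designed into two new labels.
pw_a_v2, pw_b_v2, bal_v2 = (uuid.uuid4().hex for _ in range(3))
v2 = {pw_a_v2: "change-password", pw_b_v2: "change-password", bal_v2: "check-balance"}

app = {"channel": "mobile-app"}
print(RESPONSES[resolve(pw_v1, app, v1)])
print(RESPONSES[resolve(pw_b_v2, app, v2)])  # same response, no rewrite needed
```

The design choice being illustrated is the extra layer of indirection: label names can change freely between training runs because nothing user-facing refers to them.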

Our team of dedicated data scientists and content designers works together to build a banking domain model consisting of hundreds of user goals that can accurately handle a densely populated intent space. We have developed our own sophisticated tools to evaluate each intent’s performance in the model every time we run training, which ensures there are no conflicts and no degradation to the intent model as a whole. The data science team can adjust the intent labels and the model independently of the user goals, and can thus keep improving the chatbot’s performance without asking customers to constantly rewrite the hundreds of responses already written and approved by legal and marketing.
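Finn AI’s internal evaluation tools aren’t described in detail here. As a generic illustration of the idea, the sketch below computes per-intent recall on a held-out set after each training run and flags intents whose recall dropped. The gold labels and predictions are toy data, not real results.

```python
from collections import defaultdict

def per_intent_recall(gold, predicted):
    """Fraction of each intent's held-out messages that the model labeled correctly."""
    hits, totals = defaultdict(int), defaultdict(int)
    for g, p in zip(gold, predicted):
        totals[g] += 1
        hits[g] += int(g == p)
    return {intent: hits[intent] / totals[intent] for intent in totals}

def regressions(before, after, tolerance=0.0):
    """Intents whose recall dropped by more than `tolerance` between two runs."""
    return sorted(i for i in before if after.get(i, 0.0) < before[i] - tolerance)

# Toy held-out set and predictions from two training runs (illustrative only).
gold = ["apply-credit-cards"] * 3 + ["check-balance"] * 2
run_1 = ["apply-credit-cards"] * 3 + ["check-balance"] * 2
run_2 = ["apply-credit-cards", "apply-mastercard", "apply-mastercard",
         "check-balance", "check-balance"]

before, after = per_intent_recall(gold, run_1), per_intent_recall(gold, run_2)
print(regressions(before, after))  # ['apply-credit-cards']
```

In this toy run, the newly added “apply-mastercard” intent absorbs messages that used to resolve to “apply-credit-cards,” and the check flags it. A check like this only catches degradation on intents the held-out set actually covers.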

Working with stable user goals, our customers also gain a much better understanding of how their chatbot will lead to successful problem resolution and can directly measure ROI based on those user goals.

Our models are built specifically for the banking industry and can go deep on banking topics. It’s fair to say our banking chatbot won’t be able to tell you the latest plant-based meat recipe. But you can be sure it understands the nuances of retail banking out of the box.